Montreal and Philadelphia Swap Young Strikers

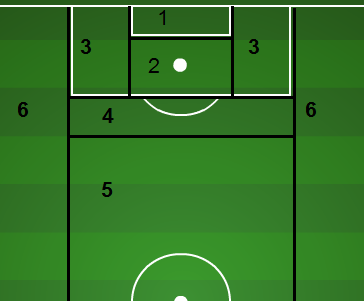

Okay, if you follow our site, you're probably already aware that the Philadelphia Union (an underrated team, in my opinion) traded their young 20-year-old striker Jack McInerney to the Montreal Impact for their young 22-year-old striker Andrew Wenger. The trade has a very Matt Garza for Delmon Young feel to it, and it leaves an odd taste in my mouth. Are the Montreal Impact selling low on Andrew Wenger? At the very least, it's reasonable to presume that they know something about him that we don't. The question then becomes: is that assessment accurate? The idea of a poacher is a contentious one, since how do you measure an individual's ability to be in the "right place at the right time"? However, of the 86 shots 'JackMac' took in the 2013 season, we know, courtesy of some digging around on the MLS Chalkboards, that no fewer than 57 came from inside the 18-yard box. He is clearly a player who can get the ball in advantageous locations. Already this season he has taken 12 shots, 11 of them from inside the 18-yard box and 6 directly in front of goal. He's been appropriately tagged on Twitter as a "fox in the box" (hold the sexual innuendos), and I think the term poacher comes naturally with that association. Unfortunately, that term may imply that he's more lucky than good. I'm not sure I entirely buy that.

Meanwhile, with everyone's attention focused on McInerney (audaciously stamped as 'The American Chicharito'), who has already been called into USMNT camp during the Gold Cup, people are forgetting about Wenger and the potential that once made him the #1 overall MLS draft pick. Back in 2012, Wenger was painted as a potent, rising talent in MLS, coming in 7th overall on MLSSoccer.com's 24 Under 24 list. Just one year later, McInerney jumped onto the list himself, rocketing to 4th overall, while Wenger was left off. The perpetual "what have you done for me lately?" mentality seemed to show in these rankings.

Wenger, despite all his talent, has run into a slew of injury-related setbacks over the last two seasons; it's not so much that he has failed to perform. The talent is still there, and I fully expect John Hackworth to tinker in an effort to get as much out of him as possible. The easy narrative here might be that he's returning home to "revitalize his career" or something like that. Instead, I think Philadelphia may have gotten an undervalued piece in this move.

Of the 31 shots Wenger has taken over the last two years, 24 came from inside the 18-yard box, a higher share than JackMac's (roughly 77 percent versus 66 percent). You can also see in the chart above, via xGpSH (expected goals per shot), that Wenger's average shot has been more likely to become a goal than McInerney's. Now, understand that all of this comes with the requisite small-sample-size caveat. Wenger has played fewer than half the minutes McInerney has and has taken fewer than half as many shots. However, estimates based on their current rates of creating shots put both of them near the level of Eddie Johnson, Will Bruin and Chris Rolfe in years past.
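To make the inside-the-box comparison concrete, here is a minimal Python sketch that computes each player's share of shots taken from inside the 18-yard box, using only the counts quoted in this post. The `box_share` helper and the `shots` dictionary are illustrative names of my own, not part of any existing tool. Because McInerney's 2013 figure is "no fewer than 57", his share there is a lower bound. This does not reproduce xGpSH, which would need shot-level expected-goal values that aren't listed here.

```python
# Shot counts quoted in this post: (shots inside the 18-yard box, total shots).
# McInerney's 2013 inside-box count is a lower bound ("no fewer than 57").
shots = {
    "McInerney 2013": (57, 86),
    "McInerney 2014 to date": (11, 12),
    "Wenger, last two seasons": (24, 31),
}


def box_share(inside: int, total: int) -> float:
    """Fraction of a player's shots taken inside the 18-yard box."""
    return inside / total


for label, (inside, total) in shots.items():
    # The ".1%" format spec multiplies by 100 and appends a percent sign.
    print(f"{label}: {inside}/{total} = {box_share(inside, total):.1%}")
```

Running it prints 66.3% for McInerney's 2013 season, 91.7% for his 12 shots so far this year and 77.4% for Wenger's last two seasons, which is where the "higher share" claim comes from (keeping in mind Wenger's much smaller sample).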

Creating shots isn't everything. Creating shots in important positions is something. As we try to value events on the pitch and attribute them to the players responsible, we may get closer to understanding how to assess performances. It's easy to overreact to certain things that come with doing this type of analysis, such as McInerney, Wenger, Bruin and Rolfe each averaging about 4.0 shots created per game. That seems rather important, but there is additional data missing. How much was each shot they created worth? What other attributes do they bring to the match? This is just a simple breakdown of two players and how they've impacted their respective clubs.

Personally, looking at all of this data, I'm of the mindset that Montreal got the better player. However, it's extremely close, and that isn't taking into account the rosters they are joining or how they might be utilized on the pitch with their new teams (4-3-3 concerns vs. 4-4-2 placement). I would say that, at this time, the difference between the two is that one is younger and has more experience. That might be a bit simplistic, but honestly, both create shots the same way in the same spaces. McInerney does so at a higher rate, but Wenger has made up for taking fewer shots by taking advantage of his more experienced partner, Marco Di Vaio, and feeding him opportunities.

This may be one of the more interesting trades in recent memory. I'm fascinated to watch what happens next and how each of these two players develops. Their career arcs will go a long way in providing the narrative for this trade, and I'm not so certain that it is as one-sided as some people might think. Referencing baseball again, the Tampa Bay (then Devil) Rays were largely regarded as having "sold low" on Delmon Young. Looking back now, we can see that he never managed to put together all the tools we once believed he had. The lesson: don't be too quick to judge Philadelphia. This trade won't be easily evaluated over a single season, and time will reveal the significance of this day.