Cruising around Twitter is about the most social I get nowadays. It sounds nerdy, and really it is, but it's amazing how much material you can discover from people smarter than me, not to mention the 140-character conversations you can have with them.

Looking around, I stumbled across an article from about 10 days ago on the site 'Bring On The Stats' by the anonymous author Chase H (@chaser_racer32 on Twitter). Chase H makes a good case that Sporting Kansas City's goalkeeper, Jimmy Nielsen, is headed for a decline, probably a gradual one. He reaches this conclusion by looking at save % and shots against per minute, a sensible approach with sound reasoning behind it.

"The table above is sorted by save %, which is pretty self-explanatory; it’s the percentage of shots saved by the keeper. Nielsen has the third-worse save % of all goalkeepers with more than 1400 minutes played. The perfect example of why wins and shutouts are not the best measures for a goalkeeper is the fact that Chivas USA keeper Dan Kennedy has saved a higher percentage of shots than Nielsen, and yet has only recorded 2 shutouts, and the team only has 4 wins. Kennedy has the misfortune of playing for one of the worst teams in the MLS, and he has faced almost 50 more shots than Jimmy Nielsen.

On the flip side, one can argue that because the defense plays so well, generally only the most quality shots make it on goal from the opponent. I do acknowledge that is a very big issue to this study, but to compare Neilsen’s stats from last season with the same defense, we see he saved 74% of the shots he faced while the defense conceded almost exactly the same numbers of shots per minute he played."
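The two numbers Chase H leans on are easy to reproduce. Below is a minimal sketch, assuming "shots" means shots on target faced; the function names and the season line are hypothetical, not Nielsen's real numbers. Shots against is scaled to 90 minutes rather than per minute, purely for readability.

```python
def save_percentage(saves: int, shots_on_target: int) -> float:
    """Share of shots on target that the keeper saved (0.0 to 1.0)."""
    if shots_on_target == 0:
        raise ValueError("no shots on target faced")
    return saves / shots_on_target


def shots_against_per_90(shots_on_target: int, minutes: float) -> float:
    """Shots on target faced, scaled to a 90-minute match."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return shots_on_target / minutes * 90


# Hypothetical season line for illustration only.
print(round(save_percentage(74, 100), 2))         # 0.74
print(round(shots_against_per_90(100, 1800), 2))  # 5.0
```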

I'm pretty sure I've seen baseball pitchers compared to goalkeepers before; if not from some random person or thing I read, then certainly from Matthias. The point of the comparison is that neither the goalkeeper nor the pitcher has as much influence on the goals or runs allowed as many traditionalists and casual fans believe.

In fact, baseball created an individual stat to track exactly what a pitcher controls, FIP (Fielding Independent Pitching), and FanGraphs grades pitchers solely on it. FIP has been well documented and was introduced to the general public by writers much more skilled than myself.

Back in the early 2000s, research by Voros McCracken revealed that the number of balls that fall in for hits against a pitcher does not correlate well from season to season. In other words, pitchers have little control over balls in play. McCracken outlined a better way to assess a pitcher's talent level by looking at the results a pitcher can control: strikeouts, walks, hit by pitches, and home runs.
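For reference, the FanGraphs version of FIP weights exactly those four outcomes and divides by innings pitched, then adds a league constant so the result sits on the ERA scale. A minimal sketch; the constant changes every season, so the 3.10 default here is only a placeholder assumption, and innings are taken as a true decimal rather than box-score notation.

```python
def fip(hr: int, bb: int, hbp: int, k: int, ip: float, constant: float = 3.10) -> float:
    """Fielding Independent Pitching with FanGraphs-style weights.

    constant: league-season FIP constant; 3.10 is a placeholder assumption.
    ip: innings as a true decimal (200 1/3 innings -> 200.333...),
        not box-score notation such as 200.1.
    """
    if ip <= 0:
        raise ValueError("innings pitched must be positive")
    # Home runs hurt most; walks and HBP hurt less; strikeouts help.
    return (13 * hr + 3 * (bb + hbp) - 2 * k) / ip + constant


print(round(fip(hr=20, bb=50, hbp=5, k=200, ip=200), 3))  # 3.225
```

Nothing about balls in play enters the formula, which is the whole point: the pitcher is judged only on outcomes the defense behind him cannot touch.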

Reading up on FIP today, and thinking back to our podcast discussion of whether keepers influence shots on target, sparked some thoughts about Chase H's article.

The idea of keepers being analogous to pitchers is all well and good, and there are certainly some similarities. The problem I'm starting to have, though, is that there may be a better way of looking at it. Pitchers still control, if only minimally, aspects of their performance such as ground-ball and fly-ball rates, strikeouts and walks. Keepers could potentially influence opponents psychologically, but the only physical tool truly at their disposal before the shot is their positioning, and positioning frequently depends on where the keeper's teammates are placed defensively and on the opponent in possession.

This isn't the neat like-for-like comparison most people jump to. However, I started reading about another baseball statistic, Ultimate Zone Rating (UZR), and it made me think...

One of the differences between UZR and linear weights is that with UZR, the amount of credit the fielder receives on each play (positive if he makes an out, negative if he allows a hit or the batter reaches on error) depends on how often an average fielder at the same position fields that particular kind of batted ball, defined by its location, speed and several other factors. With offensive linear weights, if a batted ball is a hit or an out, the credit the batter receives does not depend on where or how hard the ball was hit, or on any other parameters.
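The core of that credit idea fits in a few lines. This is a deliberately simplified sketch, not the full UZR method: it assumes we already know, for the bucket a batted ball falls into, how often an average fielder converts it into an out (`avg_out_rate`) and how many runs separate a hit from an out on that play (`run_value`). Both inputs and the function name are hypothetical.

```python
def play_credit(made_out: bool, avg_out_rate: float, run_value: float) -> float:
    """Simplified UZR-style credit for one batted ball.

    avg_out_rate: how often an average fielder at this position turns this
        kind of ball (same location, speed, etc.) into an out.
    run_value: runs separating a hit (or reached on error) from an out.
    """
    if not 0.0 <= avg_out_rate <= 1.0:
        raise ValueError("avg_out_rate must be between 0 and 1")
    if made_out:
        # Hard plays (low avg_out_rate) earn more credit when converted.
        return (1.0 - avg_out_rate) * run_value
    # Routine plays (high avg_out_rate) cost more when missed.
    return -avg_out_rate * run_value


print(round(play_credit(True, 0.2, 0.75), 3))   # 0.6
print(round(play_credit(False, 0.9, 0.75), 3))  # -0.675
```

Averaged over many plays, a fielder who converts exactly as often as the average fielder in every bucket nets out near zero, which is what makes the number comparable across fielders who see very different mixes of chances.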

Maybe we (and by we, I mean me) are looking at keepers the wrong way. If it's irresponsible to assume keepers control wins, shutouts and the like, is it any more responsible to assume that every goal scored against them is their fault? I'm talking about save percentage here.

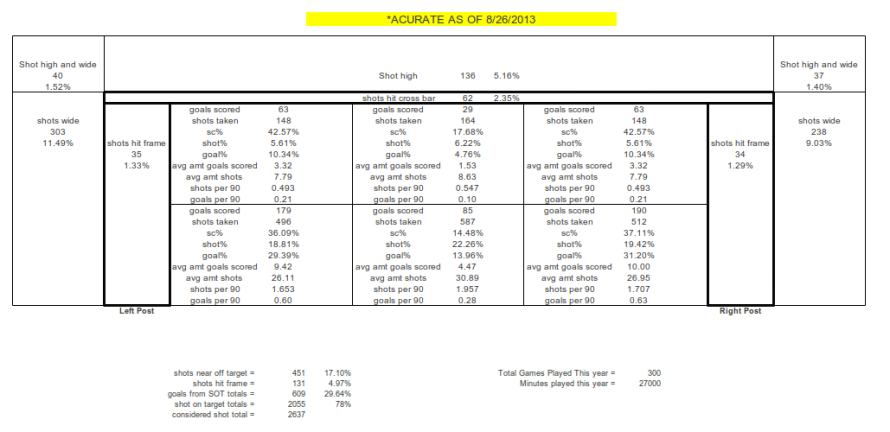

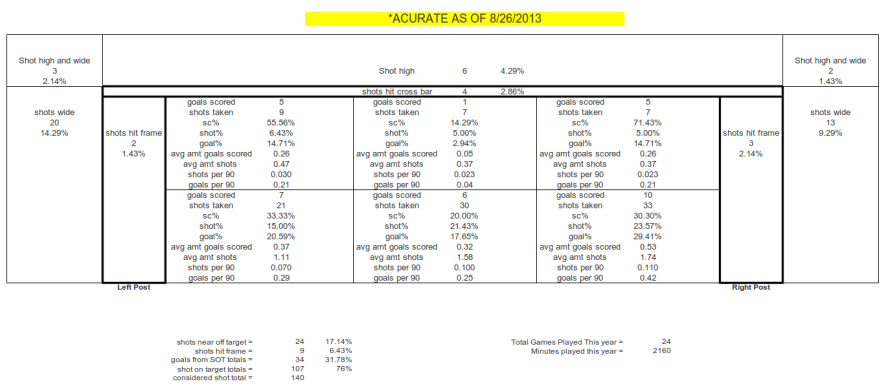

To test this Keeper UZR idea, we need to create a set of guidelines in the same manner as those set out for UZR. There is also a key limitation: we don't have six years' worth of data to work from. We barely have three years of chalkboard data, and if we use WhoScored or Squawka, we have even less than that.

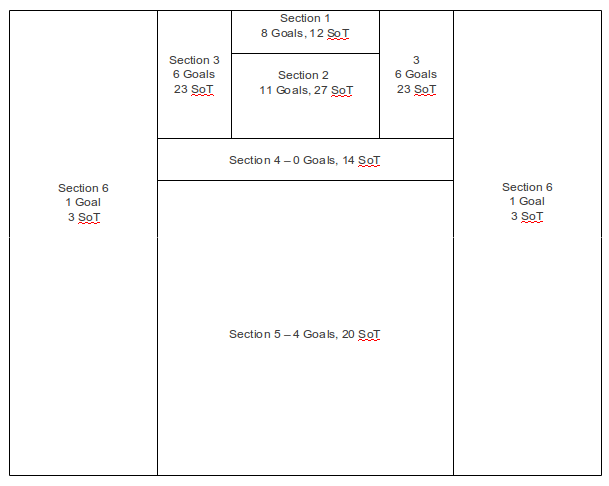

The other problem is that we don't know the speed of the shot, and getting its angle isn't necessarily easy either, though that isn't particularly important at this stage. My goal this week is to take Squawka's shot data and put together a visual representation of the six prominent scoring locations, complete with the associated save data.
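As a rough sketch of how the shot data could be bucketed, the code below assumes the six locations are a 3 x 2 grid over the goal mouth (left/center/right by low/high) and that each shot on target comes with normalized coordinates where it crossed the line. That grid, the coordinate convention and the `Shot` type are all hypothetical; Squawka's actual data format may look nothing like this.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Shot:
    # Hypothetical normalized goal-mouth coordinates (not Squawka's format):
    # y: 0.0 = left post, 1.0 = right post; z: 0.0 = ground, 1.0 = crossbar.
    y: float
    z: float
    saved: bool


def frame_zone(shot: Shot) -> str:
    """Map a shot on target to one of six zones: 3 columns x 2 rows."""
    col = "left" if shot.y < 1 / 3 else "center" if shot.y < 2 / 3 else "right"
    row = "low" if shot.z < 0.5 else "high"
    return f"{row}-{col}"


def save_rate_by_zone(shots: list[Shot]) -> dict[str, float]:
    """Fraction of shots saved in each zone that saw at least one shot."""
    faced: dict[str, int] = defaultdict(int)
    saved: dict[str, int] = defaultdict(int)
    for shot in shots:
        zone = frame_zone(shot)
        faced[zone] += 1
        saved[zone] += int(shot.saved)
    return {zone: saved[zone] / faced[zone] for zone in faced}


# Made-up toy shots, for illustration only.
shots = [
    Shot(0.5, 0.2, True),
    Shot(0.5, 0.3, True),
    Shot(0.1, 0.8, False),
    Shot(0.1, 0.7, True),
]
print(save_rate_by_zone(shots))  # {'low-center': 1.0, 'high-left': 0.5}
```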

The first thing we need to establish is where shots are saved least often, and how good keepers are at stopping the goals they should stop. This may seem unnecessary, since we can probably already guess that the likeliest goal-scoring spots are the outside areas near the posts. However, we still need numbers, and we still need to know how good teams are at preventing the goals they usually should prevent.

Controlling for the difficulty of each shot on target by its location on the frame at least gives us a starting point for understanding what goalkeepers are doing right and what they are doing wrong.
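Putting the pieces together, a location-adjusted keeper rating could credit each save or goal against the league save rate for that zone, in the same spirit as the UZR credit above. This is only a sketch of the Keeper UZR idea under stated assumptions: the zone labels, league rates and keeper log are made up, and location is the only difficulty factor considered.

```python
def saves_above_expected(shots: list[tuple[str, bool]],
                         league_save_rate: dict[str, float]) -> float:
    """Sum over shots faced of (actual save - league save rate for that zone).

    shots: (zone, saved) pairs for every shot on target the keeper faced.
    league_save_rate: hypothetical average save rate per zone.
    Positive totals mean more saves than an average keeper would be
    expected to make on the same shots.
    """
    total = 0.0
    for zone, saved in shots:
        expected = league_save_rate[zone]
        total += (1.0 if saved else 0.0) - expected
    return total


# Made-up league rates and keeper log, for illustration only.
league = {"low-center": 0.9, "high-left": 0.4}
keeper = [("low-center", True), ("low-center", False), ("high-left", True)]
print(round(saves_above_expected(keeper, league), 2))  # -0.2
```

The toy keeper saves 2 of 3 shots (about 67%), which looks respectable, yet he finishes slightly below expectation because the goal he allowed came from a zone an average keeper saves 90% of the time. That gap between raw save % and location-adjusted performance is exactly what save % alone hides.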